Cutting-Edge CNN Approaches for Breast Histopathological Classification: The Impact of Spatial Attention Mechanisms

Alaa Hussein Abdulaal 1, Riyam Ali Yassin 2, Morteza Valizadeh 2, Ali H. Abdulwahhab 3, Ali M. Jasim 4, Ali Jasim Mohammed 5, Hussein Jumma Jabir 5, Baraa M. Albaker 5, Nooruldeen Haider Dheyaa 6, Mehdi Chehel Amirani 7

1 Department of Electrical Engineering, Al-Iraqia University, Baghdad, Iraq

2 Department of Electrical Engineering, Urmia University, West Azerbaijan, Iran

3 Department of Electrical and Computer Engineering, Altinbas University, Istanbul, Turkey

4 Department of Network Engineering, Al-Iraqia University, Baghdad, Iraq

5 Department of Electrical Engineering, Al-Iraqia University, Baghdad, Iraq

6 Department of Communication Engineering, Kashan University, Kashan, Iran

7 Department of Electrical Engineering, Urmia University, West Azerbaijan, Iran

ABSTRACT

This paper investigates advanced techniques for breast histopathological classification using two robust convolutional neural network (CNN) architectures: Inception V3 and VGG19. Spatial attention mechanisms are integrated to enhance the models' capability to focus on crucial regions within histology images. These enhancements improve diagnostic accuracy by allowing the models to concentrate on critical features for accurate detection. The research leverages two prominent datasets, BACH for multiclass classification and BreaKHis for binary classification, which provide extensive collections of breast cancer histology images, enabling thorough training and evaluation of the proposed models. Inception V3 with a spatial attention mechanism achieved an accuracy of 99.73% for binary classification and 99.06% for multiclass classification. Integrating spatial attention mechanisms is anticipated to significantly advance the development of automated breast cancer detection systems, offering potential improvements in early diagnosis and treatment planning. This study demonstrates how combining state-of-the-art CNN architectures with attention mechanisms can significantly improve medical image analysis, ultimately contributing to better patient outcomes.

Received 23 July 2024; Accepted 30 August 2024; Published 05 October 2024

Corresponding Author: Alaa Hussein Abdulaal, Engineeralaahussein@gmail.com

DOI: 10.29121/ShodhAI.v1.i1.2024.14

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2024 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Breast Cancer, CAD, Convolutional Neural Network, Spatial Attention Mechanisms, Histopathological Images

1. INTRODUCTION

Breast cancer is one of the most common neoplasms affecting women, and it strongly affects physical health, psychological well-being, and overall quality of life. According to the World Health Organization, breast cancer is estimated to be the leading cause of cancer deaths in women worldwide, with millions of new cases diagnosed every year. In Indonesia, breast cancer is the most common malignancy in women and causes significant mortality every year. A major cause of death in these cases is late diagnosis resulting from poor access to appropriate, reliable diagnostic techniques Giaquinto et al. (2022).

Over the last decades, methods for diagnosing breast cancer have improved considerably, with a variety of strategies aimed at early detection, including mammography, ultrasound, biopsy, and MRI. Mammography, the most widely applied, uses X-rays to detect masses or lumps in the breast. Its drawback is limited sensitivity in women with dense breast tissue Elmore et al. (2015). Ultrasonography generally supplements mammography, especially to characterize lumps detected during mammography. Biopsy involves excising tissue from a suspicious area and examining it under the microscope for cancerous cells. MRI uses magnetic fields and radio waves to obtain clear pictures of the breast and is frequently used in complicated cases or for women with a very high predisposition to breast cancer Jasti et al. (2022).

Histopathology is the study of tissue changes at the microscopic level caused by disease. It is essential in the diagnosis of breast cancer because it identifies and classifies cancerous cells by their morphology. Histopathology provides detailed information about the type and grade of the cancer, which is very helpful in reaching proper treatment recommendations. Histopathological examination is conventionally performed on a tissue sample taken by biopsy, and its results must be interpreted by a pathologist Shen et al. (2019).

Breast cancer can be classified into many types according to histopathological appearance. The main types include ductal carcinoma in situ (DCIS), lobular carcinoma in situ (LCIS), invasive ductal carcinoma (IDC), and invasive lobular carcinoma (ILC). DCIS is the classical non-invasive, in situ type, in which cancerous cells are contained within the mammary ducts and have not extended beyond them. LCIS is also non-invasive: abnormal cells are found in the mammary lobules but have not spread. IDC is the most common invasive type, in which cancerous cells extend beyond the mammary ducts into surrounding tissue. In ILC, cancerous cells arising in the mammary lobules invade other tissues Liu et al. (2019). Breast tumors can also be classified as benign or malignant. Benign tumors, such as fibroadenomas and cysts, are non-cancerous and, as a rule, do not spread to other parts of the body. Although most benign lesions are not harmful, some can grow considerably, possibly causing discomfort or cosmetic effects. Malignant tumors, such as IDC and ILC, are cancerous and can metastasize, that is, spread to other parts of the body; left untreated, they may lead to grave complications and death Moscalu et al. (2023).

Computer-aided diagnosis (CAD) has changed how physicians approach breast cancer detection. A CAD system assists radiologists by flagging suspicious regions in medical images, such as mammograms, that may need further investigation. CAD systems were developed to reduce human error and thereby increase diagnostic accuracy, which is essential for detecting breast cancer at an early stage. Modern CAD systems incorporate machine learning and deep learning algorithms to interpret medical images more precisely McKinney et al. (2020).

Deep learning (DL) is a sub-field of machine learning (ML) that models data with multi-layered artificial neural networks. Deep learning algorithms have been developed to assess histopathological images for breast cancer diagnosis and to classify them with high accuracy. To do so, the model must be trained on a large dataset of histopathological images annotated by pathologists, from which it learns the patterns and characteristics indicative of breast cancer Litjens et al. (2017). One significant benefit of deep learning for histopathological image analysis is that it extracts relevant features from images automatically, without manual feature engineering Jadoon et al. (2023). Deep learning can improve diagnostic accuracy and speed, reduce costs, and increase access to good-quality health services in resource-poor settings. Recent studies suggest that deep learning algorithms may surpass human pathologists in detecting breast cancer from histopathology images Raaj, R. S. (2023). Deep learning may also alleviate pathologists' workload by processing large numbers of images quickly and efficiently Huang et al. (2019). Finally, beyond its diagnostic performance, deep learning may support personalized treatment by providing more detailed information about the characteristics of an individual patient's cancer.

2. RELATED WORK

Many studies have addressed automatic breast cancer classification, applying DL-based methods to histopathological images to improve diagnosis. Approaches to classifying breast histopathological images are generally either binary or multi-class. Binary classification distinguishes between benign and malignant tumors. Multi-class classification, which assigns images to different tumor subtypes, is more challenging. Aldakhil et al. (2024) presented ECSAnet, which builds on EfficientNetV2 and adds a Convolutional Block Attention Module (CBAM) with several fully connected layers to further improve the classification accuracy of histopathology images. ECSAnet was trained on the BreaKHis dataset with Reinhard stain normalization and image augmentation to reduce overfitting and improve generalization. Test results show that ECSAnet outperforms AlexNet, DenseNet121, EfficientNetV2-S, InceptionNetV3, ResNet50, and VGG16, attaining accuracies of 94.2% at 40×, 92.96% at 100×, 88.41% at 200×, and 89.42% at 400× magnification. Ritesh et al. (2024) proposed FCCS-Net, a fully convolutional attention-based transfer learning method for breast cancer classification. FCCS-Net uses a pre-trained ResNet18 with convolutional attention at various levels and additional residual connections. Its performance was tested on the public BreaKHis, IDC, and BACH datasets: accuracy reached 99.25%, 98.32%, 99.50%, and 96.98% at 40×, 100×, 200×, and 400× magnification on BreaKHis; 90.58% on the IDC dataset at 40× magnification; and an average of 91.25% on the BACH dataset. Das et al.
(2024) proposed the Enhanced CBAM Collaborative Network to improve the accuracy and efficiency of breast cancer diagnosis. In this model, a pre-trained DenseNet121 is combined with the Convolutional Block Attention Module for benign versus malignant classification of histology images. A parallel branch uses Inception-ResNet-v2 with CBAM for feature extraction, optimized by a feature pooling module. This approach achieved binary classification accuracies on the BreaKHis dataset of 98.33%, 98.08%, 99.67%, and 97.80% at 40×, 100×, 200×, and 400× magnification, respectively, with an overall accuracy of 98.31%, and a multi-class accuracy of 90.67% on the BACH dataset. Balasubramanian et al. (2024) proposed an ensemble-based deep learning approach to analyzing breast cancer histopathological images. The ensemble comprises VGG16 and ResNet50 for the BACH dataset, and VGG16, ResNet34, and ResNet50 for the BreaKHis dataset. The work introduces preprocessing techniques that enable analysis of focused areas in high-resolution images. It obtained a classification accuracy of 95.31% on BACH and 98.43% on BreaKHis, indicating that the ensemble strategy effectively improves classification. Guo et al. (2024) described a multi-scale bar convolution pooling structure with patch attention for classifying breast histopathology images. The method uses a modified DenseNet for BCPTI to extract extended pathological features with spatial and channel weighting attention. Evaluated on the BreaKHis dataset, the model was reported to reach 99.88% accuracy for multi-class classification and 97.62% for binary classification. Rahman et al.
(2024) proposed another framework for classifying breast cancer histopathology images, named ADBNet. ADBNet uses a modified convolutional block attention module to emphasize essential features and address model interpretability. The method extracts important characteristics from histopathological images at various magnification factors using CNNs. Testing showed that the model attains high accuracy across magnification factors. At the patient level, ADBNet achieved accuracies of 99.05% at 40×, 99.15% at 100×, 99.03% at 200×, and 98.60% at 400×. Liu et al. (2024) proposed CTransNet, a neural network architecture for multi-subtype classification of breast cancer histopathology images. The model integrates a pre-trained DenseNet, a transfer learning branch, a residual collaborative branch, and a feature fusion module. Its optimized feature fusion strategy improves the coordination of target image feature extraction. Testing on the BreaKHis dataset indicated a classification accuracy of 98.29%. Xu (2023) proposed MDFF-Net, a CNN-based model for categorizing breast cancer histopathology images. MDFF-Net comprises a one-dimensional feature extraction network, a two-dimensional feature extraction network, and a feature fusion classification network. To enhance accuracy, the network contains multi-scale channel mixing modules with a channel attention module, and the one-dimensional feature extraction network handles the information disparity in the diagnosis process. On the BreaKHis and BACH datasets, MDFF-Net yields accuracies of 98.86% and 86.25%, respectively. Kausar et al. (2023) designed a lightweight CNN model to detect all subtypes in breast cancer histopathology images.
The model decomposes images into frequency bands with a wavelet transform, and only the low-frequency bands are processed by a lightweight CNN (LWCNN) built from inverted residual block modules. The design was tested on the ICIAR 2018, BreaKHis, and BRACS datasets, achieving accuracies of 96.2%, 99.8%, and 72.2%, respectively, with inference times of 0.67 s and 0.21 s per image. Zou et al. (2021) proposed AHoNet for breast histopathological image classification, which embeds cross-channel attention into high-order statistics to obtain deep, discriminative feature representations. An attention module mines salient local features from histopathology images, and matrix normalization further enhances the global representation. AHoNet achieved classification accuracies of 99.29% on the BreaKHis dataset and 85% on the BACH dataset. That work represents a significant advance in histopathological image analysis for breast tumor classification and shows great potential for clinical diagnosis. Ukwuoma et al. (2022) developed DEEP_Patch, an approach to classifying breast histopathological images using multiple self-attention heads. DEEP_Patch fuses DenseNet201 with VGG16 to extract the global and local features needed for classification, and its self-attention heads extract spatial information to identify the regions that matter for the decision. Evaluation on the public BreaKHis and ICIAR 2018 Challenge datasets showed a score of 1.0 for the benign class and 0.99 for the malignant class on BreaKHis and an accuracy of 1.0 on the ICIAR 2018 Challenge. This work demonstrates the benefits DEEP_Patch can bring to histopathology image processing and interpretation, and it could help address current challenges in clinical diagnostics.
Another approach was presented by Zou et al. (2021), using image processing techniques to improve the pathological diagnosis of breast cancer. Two modules work together, with the stated aim of efficient processing and 100% correct diagnoses: an anomaly detection module based on a support vector machine (ADSVM), and the Adaptive Resolution Net, which adjusts resolution and sub-block depth according to the difficulty of the image being classified. Experimental evaluation on public datasets showed an image-level binary classification accuracy of up to 98.83% at 200× on BreaKHis and up to 99.25% on BACH 2018.

This study uses two robust CNN architectures, Inception V3 and VGG19, for breast cancer classification. Both models are enhanced with spatial attention mechanisms to improve their ability to focus on critical regions within histology images. Two well-known datasets, BACH and BreaKHis, provide large collections of histology images for training and evaluation. A 5-fold cross-validation protocol is applied to assess the robustness and reliability of model performance. Integrating spatial attention mechanisms with Inception V3 and VGG19 is expected to improve diagnostic accuracy in automatic breast cancer detection systems. The remainder of the paper covers data preprocessing, model training, evaluation, and a discussion of the results, and it explains how these deep learning techniques could further improve breast cancer diagnosis for better patient care and outcomes.

3. DATASETS

3.1. BACH DATASET

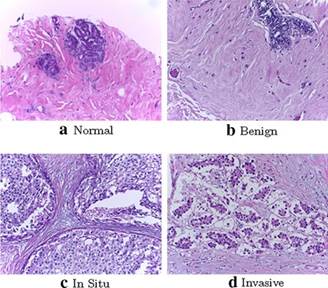

The BACH (Breast Cancer Histology) dataset consists of high-resolution microscopic images of breast tissue specimens categorized into four groups: normal tissue, benign lesions, in situ carcinoma, and invasive carcinoma Araújo et al. (2017). The images were collected from multiple medical institutions and annotated by expert pathologists, providing a rich and reliable ground truth for training and evaluation. The dataset remains pertinent for developing and benchmarking automated breast cancer detection systems. Preprocessing for this dataset consists of intensity normalization, stain normalization, and data augmentation. Figure 1 shows samples of the BACH dataset labels.

Figure 1 BACH Dataset

3.2. BREAKHIS DATASET

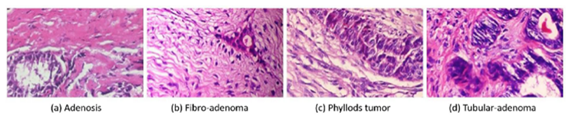

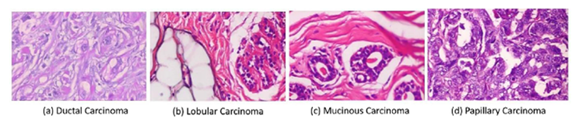

The BreaKHis dataset contains 7,909 microscopic images of histopathological sections of breast tumor tissue from 82 patients, divided into benign and malignant classes with further subclasses Spanhol et al. (2016). Images were taken at four magnification levels, 40×, 100×, 200×, and 400×, as shown in Table 1. The dataset was provided by the Laboratory of Pathological Anatomy and Cytopathology, Brazil, and supports studying how classification performance varies with magnification. Its challenges include variability in staining quality and class imbalance. Preprocessing involves resizing, data augmentation, and stain normalization. Figure 2 and Figure 3 show the benign and malignant subclasses of the BreaKHis dataset.

Figure 2 Sub-Classes of Benign Tumor Images Sharma et al. (2020)

Figure 3 Sub-Classes of Malignant Tumor Images Sharma et al. (2020)

Table 1 BreaKHis Dataset

| Class | Types | Abb. | 40X | 100X | 200X | 400X |
|---|---|---|---|---|---|---|
| Benign | Adenosis | A | 114 | 113 | 111 | 106 |
| Benign | Fibro | F | 253 | 260 | 264 | 237 |
| Benign | Tubular | TA | 109 | 121 | 108 | 115 |
| Benign | Phyllodes | PT | 149 | 150 | 140 | 130 |
| Malignant | Ductal | DC | 864 | 903 | 896 | 788 |
| Malignant | Lobular | LC | 156 | 170 | 163 | 137 |
| Malignant | Mucinous | MC | 205 | 222 | 196 | 169 |
| Malignant | Papillary | PC | 145 | 142 | 135 | 138 |
| Total | | | 1995 | 2081 | 2013 | 1820 |

Both datasets are relevant to breast cancer studies, providing a comprehensive foundation for developing and testing machine learning algorithms. The BACH dataset focuses on multi-class classification of tissue images, while the BreaKHis dataset offers a detailed view at multiple magnification levels. Together, the two datasets support a detailed methodology for identifying breast cancer that accounts for the unique properties and challenges of each.

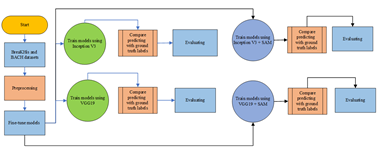

4. METHODS

The current work uses two well-established CNN architectures for classifying breast histology images: Inception V3 Szegedy et al. (2016) and VGG19 Simonyan et al. (2014). Spatial attention mechanisms are incorporated into both models so that they focus selectively on the most informative regions of the histological images. The work comprises the following steps: data preprocessing, model training, the addition of spatial attention mechanisms, model validation, and performance evaluation. A block diagram of the proposed methodology is shown in Figure 4.

Figure 4 Flowchart of the Proposed Model

4.1. DATA PREPROCESSING

Data preprocessing is one of the most critical stages of training, since it brings every input into a consistent structure and quality. In this work, input images were resized to the dimensions each network expects, namely 299 × 299 pixels for Inception V3 and 224 × 224 pixels for VGG19. Pixel values were then normalized, as required for training a CNN. Data augmentation was also applied to enlarge the dataset and introduce variability artificially, using rotation, flipping, and scaling. This helps alleviate overfitting, since each model sees enough variation to generalize well.
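The normalization and augmentation steps can be illustrated with a minimal, dependency-free sketch. It is not the authors' pipeline: the function names, the [0, 1] scaling, the 50% flip probability, and the restriction to 90-degree rotations are all assumptions chosen for illustration, and resizing and scaling are omitted because they need an image library.

```python
import random

def normalize(image, max_value=255.0):
    """Scale pixel intensities from [0, max_value] to [0, 1] (assumed scheme)."""
    return [[pixel / max_value for pixel in row] for row in image]

def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image, rng):
    """Randomly flip and rotate one image (hypothetical augmentation policy)."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        image = rotate_90(image)
    return image

patch = [[0, 51], [102, 255]]  # tiny 2x2 grayscale stand-in for a histology image
scaled = normalize(patch)
flipped = horizontal_flip(patch)
rotated = rotate_90(patch)
augmented = augment(scaled, random.Random(0))
```

Flips and quarter turns only rearrange pixels, so the augmented patch contains exactly the same intensity values as the input; this is why such transforms are considered label-preserving for tissue images, which have no canonical orientation.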

4.2. INCEPTION V3

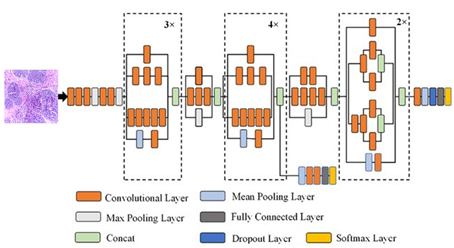

Inception V3 is among the most popular deep learning models for image recognition. Developed by researchers at Google, it is an advanced version of the earlier Inception architectures, Inception V1 and V2. Its development focused on improving the efficiency and accuracy of image classification through innovations in the network structure. Probably the most important novelty in Inception V3 compared with earlier versions is a more efficient factorization of convolutions: instead of one large convolution filter, it uses several smaller ones that are cheaper to compute. For example, a 5×5 convolution is factored into two consecutive 3×3 convolutions, which reduces the number of parameters and computations. In addition, Inception V3 factorizes convolutions asymmetrically, replacing an n×n convolution with a 1×n convolution followed by an n×1 convolution, for further computation and memory efficiency Szegedy et al. (2016). The Inception V3 structure consists of several such layers, each with a specific role in processing the image. Figure 5 shows the overall architecture of the Inception V3 model, describing how the layers are connected and how information flows from the input to the final output.
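The saving from replacing one 5×5 convolution with two 3×3 convolutions can be checked with a short calculation. The sketch below counts weights only (biases ignored) and assumes, purely for illustration, that the input and output channel counts are equal; the channel width of 64 is a hypothetical value, not one taken from the Inception V3 architecture.

```python
def conv_weights(kernel_h, kernel_w, in_channels, out_channels):
    """Number of weights in a convolution layer, ignoring biases."""
    return kernel_h * kernel_w * in_channels * out_channels

channels = 64  # hypothetical channel width, kept constant across layers
single_5x5 = conv_weights(5, 5, channels, channels)
stacked_3x3 = 2 * conv_weights(3, 3, channels, channels)
saving = 1 - stacked_3x3 / single_5x5

print(single_5x5, stacked_3x3, f"{saving:.0%}")
```

Under this assumption the ratio is 18/25 regardless of the channel width, so the two stacked 3×3 layers use 28% fewer weights while covering the same 5×5 receptive field.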

The Inception V3 model has achieved strong performance in many image classification tasks and is also applied in practical domains such as image-based disease diagnosis, for example histopathological image classification for breast cancer. While retaining the capacity to capture features at different scales, the model is computationally more efficient than its predecessors.

Figure 5 Inception V3 Architecture Ali et al. (2021)

4.3. VGG19

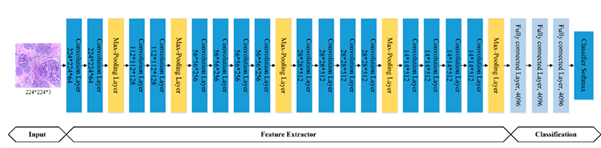

VGG19 is a well-known deep learning model that is often used for image recognition tasks. It was presented by the Visual Geometry Group at the University of Oxford: in 2014, Simonyan and Zisserman introduced it in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) Simonyan et al. (2014). The VGG19 architecture is deeper than VGG16. It has 19 weight layers, 16 convolutional and 3 fully connected, a design that aims for high accuracy in image classification. The main innovation of the architecture is the use of small 3×3 filters in all convolutional layers. This allows a deeper model without an explosion in the number of parameters, so the model can learn more intricate features from the image. A further advantage is that small filters keep computation efficient and help reduce overfitting. Figure 6 shows the model's architecture.

Figure 6 VGG 19 Architecture Ali et al. (2021)

VGG19 performs strongly on a wide range of image classification tasks. In practice, it is used in image-based medical diagnostics, such as histopathological image classification for breast cancer. Although it has more parameters than some newer models, VGG19 remains popular for being straightforward and reliable while providing high accuracy. In this work, Inception V3 and VGG19 are retrained with spatial attention mechanisms so that they focus on the relevant regions of breast cancer images, aiming for better classification accuracy.

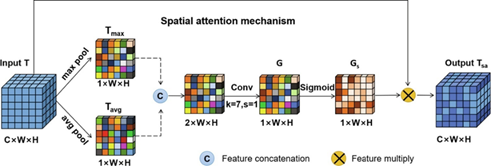

4.4. SPATIAL ATTENTION

The spatial attention mechanism (SAM) directs a model toward the critical areas within an image, improving performance on a range of image processing tasks. Spatial attention models are motivated by the need to emulate human visual attention, in which concentration tends to fall on the more informative and relevant areas of a scene. The mechanism has been successful in several applications, such as object detection, image segmentation, and image classification. The model modulates the spatial feature representation of the input image so that it attends to the parts of the image that are most relevant to the task. In general, an attention map is computed from the feature representations produced by the convolutional layers. The attention map weights the spatial features so that important ones are highlighted and less relevant ones are suppressed Guo et al. (2022).

The basic equations of a typical spatial attention mechanism are as follows.

Let F be the input feature map:

$$F \in \mathbb{R}^{C \times H \times W} \tag{1}$$

where C is the number of channels, H is the height, and W is the width.

Average pooling (2) and max pooling (3) are performed along the channel axis to generate two 2D maps:

$$F_{avg} = \mathrm{AvgPool}(F) \in \mathbb{R}^{1 \times H \times W} \tag{2}$$

$$F_{max} = \mathrm{MaxPool}(F) \in \mathbb{R}^{1 \times H \times W} \tag{3}$$

The pooled features are concatenated (4) and passed through a convolution (5) to obtain the attention map:

$$F_{concat} = [F_{avg}; F_{max}] \in \mathbb{R}^{2 \times H \times W} \tag{4}$$

$$M_s(F) = \sigma(\mathrm{Conv2D}(F_{concat})) \in \mathbb{R}^{1 \times H \times W} \tag{5}$$

where Conv2D is a convolution operation with a kernel size of 1 × 1, and σ is the sigmoid activation function.

The attention map is multiplied with the original feature map (6) to obtain the refined feature map:

$$F' = M_s(F) \odot F \tag{6}$$

where ⊙ denotes element-wise multiplication, with the attention map broadcast across the C channels.
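Equations (1) to (6) can be traced on a toy feature map with a short, dependency-free sketch. Because the convolution has a 1 × 1 kernel, it reduces at each pixel to a weighted sum of the two pooled values; the weights `w_avg`, `w_max` and the `bias` are hypothetical stand-ins for parameters that a real network would learn, and a practical implementation would use a tensor library rather than nested lists.

```python
import math

def spatial_attention(feature_map, w_avg, w_max, bias=0.0):
    """Apply Eqs. (1)-(6) to a feature map given as C nested H x W lists.

    w_avg, w_max, and bias stand in for the learned 1x1 convolution
    parameters (assumed values, not taken from the paper).
    """
    channels = len(feature_map)
    height, width = len(feature_map[0]), len(feature_map[0][0])
    attention = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            values = [feature_map[c][i][j] for c in range(channels)]
            f_avg = sum(values) / channels                 # Eq. (2): channel-wise mean
            f_max = max(values)                            # Eq. (3): channel-wise max
            logit = w_avg * f_avg + w_max * f_max + bias   # Eqs. (4)-(5): 1x1 conv on [avg; max]
            attention[i][j] = 1.0 / (1.0 + math.exp(-logit))  # sigmoid
    # Eq. (6): broadcast the H x W attention map over every channel
    refined = [[[attention[i][j] * feature_map[c][i][j] for j in range(width)]
                for i in range(height)] for c in range(channels)]
    return attention, refined

features = [
    [[1.0, 0.0], [0.0, 2.0]],  # channel 0
    [[3.0, 0.0], [0.0, 4.0]],  # channel 1
]
attention, refined = spatial_attention(features, w_avg=1.0, w_max=1.0)
```

In this toy input, positions where both channels are zero receive an attention weight of exactly 0.5 (the sigmoid of zero), while positions with strong activations receive weights close to 1, so the refined map keeps salient locations nearly unchanged and damps the rest.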

These equations describe how a spatial attention map is generated and applied to the input feature map to enhance important spatial features. The mechanism helps models focus on the relevant parts of the image, improving performance on tasks such as object detection and image classification. The complete structure of the spatial attention module, including how each layer is connected and how the attention map modulates spatial features, is shown in Figure 7. Spatial attention has improved deep learning models on various image processing tasks. For example, models that integrate spatial attention detect objects more accurately in complex and cluttered images. The approach can also reduce the number of parameters required, because the model focuses computational resources on the more relevant areas Yan et al. (2020).

In medical applications, such as histopathological image classification for breast cancer diagnosis, spatial attention allows models to identify more accurately the areas that indicate the presence of cancer cells. By emphasizing critical features and ignoring less important ones, models can achieve higher accuracy and assist pathologists in making more informed diagnostic decisions Cheng et al. (2021).

Figure 7 Spatial Attention Architecture Ding et al. (2021)

4.5. MODEL INITIALIZATION

The models were initialized by loading weights pre-trained on the ImageNet dataset into Inception V3 and VGG19. These weights are a good starting point because pre-training on ImageNet yields powerful feature extractors. The last layers were changed to match the number of classes in the BACH and BreaKHis datasets: the original fully connected layers, designed for the 1000 ImageNet classes, were replaced with layers for binary classification (benign versus malignant) or multi-class classification, depending on the dataset. This adapts the models to distinguishing the tissue categories in breast cancer histology images.

4.6. TRAINING CONFIGURATION

The training configuration was designed for robust model performance. Five-fold cross-validation was used to evaluate the models. The dataset is divided into five parts; four parts are used for training, and the remaining part is kept for validation. This is repeated five times, so that each part serves once as the validation set. Cross-validation gives a more reliable estimate of model performance and reduces the bias that a single data split could introduce.
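The five-fold protocol can be sketched as an index-splitting routine. This is an illustrative sketch only: the function name, the shuffling seed, and the toy sample count are assumptions, and the split is made over individual images because the text does not state whether folds were formed per image or per patient.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, val_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)          # shuffle once, reproducibly
    folds = [indices[i::k] for i in range(k)]     # k near-equal, disjoint parts
    for held_out in range(k):
        val = folds[held_out]
        train = [idx for f, fold in enumerate(folds) if f != held_out for idx in fold]
        yield train, val

splits = list(k_fold_indices(n_samples=20, k=5))
for fold, (train, val) in enumerate(splits, start=1):
    print(f"fold {fold}: {len(train)} training samples, {len(val)} validation samples")
```

Every sample appears in exactly one validation fold. For a dataset such as BreaKHis, where one patient contributes many images, grouping the indices by patient before splitting would be a natural variation to avoid the same patient appearing in both training and validation sets.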

The models were optimized with stochastic gradient descent (SGD), which is efficient for deep learning tasks on large, high-dimensional data. A learning rate scheduler updates the learning rate dynamically during training, which is essential to balance convergence speed and stability. A high learning rate speeds up convergence but may overshoot the optimum, whereas a low learning rate gives stability but can slow convergence. Learning rate scheduling manages this trade-off by starting with a larger rate and decaying it gradually, neither too fast nor too slow, so that the model is fine-tuned in later epochs.
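One common way to realize such a schedule is to reduce the learning rate whenever the validation loss stops improving. The sketch below assumes this plateau-based rule; the class name, the initial rate of 0.01, the decay factor of 0.5, and the patience of two epochs are hypothetical values, since the scheduler's exact settings are not given in the text.

```python
class ReduceOnPlateau:
    """Multiply the learning rate by `factor` after `patience` epochs without improvement."""

    def __init__(self, lr, factor=0.5, patience=2, min_lr=1e-6):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")
        self.stale_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the learning rate to use next."""
        if val_loss < self.best:
            self.best = val_loss
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
            if self.stale_epochs >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.stale_epochs = 0
        return self.lr

scheduler = ReduceOnPlateau(lr=0.01)
history = [scheduler.step(loss) for loss in [0.9, 0.7, 0.7, 0.71, 0.6]]
```

With the toy losses above, the rate stays at 0.01 while the loss improves and is halved once two consecutive epochs bring no improvement.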

Early stopping was also used to prevent overfitting. This technique monitors the loss on the validation set during training; if the loss does not improve for a set number of epochs, training stops. This keeps the model from fitting the training data too closely, which would degrade performance on new, unseen data.
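The stopping rule can be sketched as follows. The function name `early_stopping_epoch`, the patience of three epochs, and the loss values are assumptions for illustration; the paper does not report the patience it used.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs. The patience value is illustrative.
    """
    best_loss = float("inf")
    best_epoch = -1
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # Patience never ran out: training used every epoch.
    return len(val_losses) - 1, best_epoch


made_up_losses = [0.8, 0.6, 0.5, 0.52, 0.51, 0.53, 0.4]
stopped_at, best_at = early_stopping_epoch(made_up_losses, patience=3)
print(stopped_at, best_at)
```

In practice the weights from the best epoch are usually restored after stopping, since the final epochs are the ones that failed to improve.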

4.7. INCORPORATION OF SPATIAL ATTENTION

Incorporating spatial attention mechanisms into the Inception V3 and VGG19 models can improve their classification performance on histopathological images of breast tissue in several ways. In particular, spatial attention refines the feature maps so that spatial information is highlighted, which matters in medical imaging, where subtle patterns indicate pathology.

In Inception V3, spatial attention is applied to refine the outputs of certain Inception modules. It performs average and max pooling on these outputs, concatenates the results, and applies a 1×1 convolution with sigmoid activation to generate an attention map. This map weights the original feature map, boosting critical features and suppressing less important ones.
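The attention step can be illustrated with a small, framework-free sketch. It assumes, as in common spatial attention designs, that the pooling runs across the channel axis; the function name `spatial_attention`, the toy feature map, and the fixed weights `w_avg`, `w_max`, and `bias` (stand-ins for the learned 1×1 convolution parameters) are hypothetical and not taken from the paper.

```python
import math


def spatial_attention(feature_map, w_avg=1.0, w_max=1.0, bias=0.0):
    """Apply a toy spatial attention step to a feature map shaped [C][H][W].

    w_avg, w_max and bias stand in for learned 1x1 convolution
    parameters; the defaults are illustrative, not trained values.
    """
    channels = len(feature_map)
    height = len(feature_map[0])
    width = len(feature_map[0][0])

    attention = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            values = [feature_map[c][i][j] for c in range(channels)]
            avg_pool = sum(values) / channels  # average pooling over channels
            max_pool = max(values)             # max pooling over channels
            # 1x1 convolution over the two pooled maps, then sigmoid.
            logit = w_avg * avg_pool + w_max * max_pool + bias
            attention[i][j] = 1.0 / (1.0 + math.exp(-logit))

    # Weight every channel of the original map by the attention map.
    refined = [
        [[feature_map[c][i][j] * attention[i][j] for j in range(width)]
         for i in range(height)]
        for c in range(channels)
    ]
    return attention, refined


features = [
    [[0.0, 2.0], [1.0, -1.0]],
    [[0.0, 4.0], [3.0, -3.0]],
]
attention_map, refined_features = spatial_attention(features)
print(attention_map)
```

Locations with strong activations receive weights near 1 and are preserved, while weakly activated locations are scaled toward zero.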

VGG19, with its deep stack of convolutional layers, takes the spatial attention module directly after its convolution blocks. Attention maps are generated through the pooling and convolution steps and refine the output of the blocks. By delineating features more precisely and suppressing noise, the models can better separate benign tissue patterns from malignant ones. Spatial attention is thus a way for this family of models to improve classification accuracy on breast histopathological images and, in turn, to support more effective diagnosis and treatment.

4.8. TRAINING EXECUTION

Each fold of the cross-validation was trained for 50 epochs with a batch size of 32. Fifty epochs gives the model ample time to learn features without overfitting, and a batch size of 32 keeps gradient estimates stable while staying within memory constraints. Model parameters are updated iteratively with the SGD optimizer, and the scheduler adjusts the learning rate on the fly according to validation performance, reducing it slowly to fine-tune the model. Performance in each fold was then evaluated with accuracy, precision, recall, and F1-score. Accuracy captures the overall correctness of the model's predictions; precision reflects how exact the positive predictions are (avoiding false positives), and recall reflects how many actual positive cases are found (avoiding false negatives). The F1-score is the harmonic mean of precision and recall and is especially informative for imbalanced data, where the positive and negative classes have unequal numbers of samples. The area under the ROC curve (AUC) summarizes diagnostic performance across all possible classification thresholds.

4.9. EVALUATION METRICS

The models' performance was evaluated using several key metrics: accuracy, precision, recall (sensitivity), F1-score, and specificity. Together, these metrics give a comprehensive assessment of the models' classification capabilities Abdulaal et al. (2024), Abdulwahhab (2024).

4.9.1. ACCURACY

Accuracy (7) measures the proportion of correctly classified instances out of the total instances.

Accuracy = (TP + TN) / (TP + TN + FP + FN) (7)

where TP is true positive, TN is true negative, FP is false positive, and FN is false negative.

4.9.2. PRECISION

Precision (8) indicates the proportion of true positive predictions among all positive predictions.

Precision = TP / (TP + FP) (8)

4.9.3. RECALL

Recall (sensitivity) (9) measures the proportion of true positive instances among all actual positive instances.

Sensitivity = TP / (TP + FN) (9)

4.9.4. F1-SCORE

The F1-score (10) is the harmonic mean of precision and recall, balancing the two metrics.

F1-score = 2 × (Precision × Sensitivity) / (Precision + Sensitivity) (10)

4.9.5. SPECIFICITY

Specificity (11) measures the proportion of actual negative instances that the model correctly identifies.

Specificity = TN / (TN + FP) (11)

These metrics were calculated for each fold in the 5-fold cross-validation, ensuring a robust evaluation of the models' performance on the breast cancer classification task.
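As a worked check of equations (7) to (11), the sketch below evaluates them on the confusion counts that Table 4 reports for Inception V3 + SAM at 400X (TP = 117, FP = 1, FN = 0, TN = 246). The function name `binary_metrics` is hypothetical, and the code omits guards against zero denominators for brevity.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute equations (7)-(11) from binary confusion counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }


# Counts for Inception V3 + SAM at 400X, as listed in Table 4.
metrics = binary_metrics(tp=117, fp=1, fn=0, tn=246)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

Rounded to two decimals, these values agree with the corresponding row of Table 2.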

5. RESULTS AND DISCUSSION

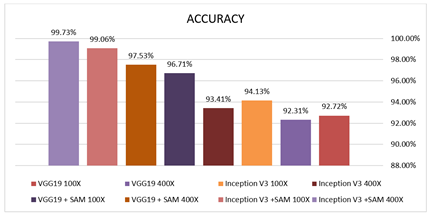

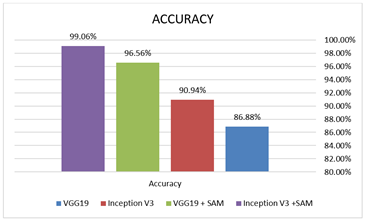

This study evaluates the performance of the Inception V3 and VGG19 models enhanced with spatial attention mechanisms on breast cancer histology image classification tasks, using 5-fold cross-validation on the BACH and BreaKHis datasets. Tables 2 and 3 compare the key performance metrics (accuracy, sensitivity, specificity, precision, and F1-score) of the models with and without a spatial attention mechanism for binary and multiclass classification, respectively. Figure 8 and Figure 9 compare the accuracy of both models visually, with and without the spatial attention mechanism, giving a clear picture of the improvements achieved.

Table 2 Evaluation Performances for Binary Classification (BreaKHis Dataset)

| N | CNN Type | Magnification factor | Accuracy | Sensitivity | Specificity | Precision | F1 Score |
|---|---|---|---|---|---|---|---|
| 1 | VGG19 | 40X | 92.23% | 85.61% | 95.51% | 90.40% | 87.94% |
| | | 100X | 92.72% | 88.03% | 95.07% | 89.93% | 88.97% |
| | | 200X | 93.05% | 88.19% | 95.29% | 89.60% | 88.89% |
| | | 400X | 92.31% | 87.50% | 94.67% | 88.98% | 88.24% |
| 2 | Inception V3 | 40X | 94.74% | 90.00% | 97.03% | 93.60% | 91.77% |
| | | 100X | 94.13% | 90.14% | 96.13% | 92.09% | 91.10% |
| | | 200X | 94.04% | 89.76% | 96.01% | 91.20% | 90.48% |
| | | 400X | 93.41% | 89.17% | 95.49% | 90.68% | 89.92% |
| 3 | VGG19 + SAM | 40X | 97.49% | 95.28% | 98.53% | 96.80% | 96.03% |
| | | 100X | 96.71% | 94.33% | 97.89% | 95.68% | 95.00% |
| | | 200X | 96.03% | 92.91% | 97.46% | 94.40% | 93.65% |
| | | 400X | 97.53% | 96.58% | 97.98% | 95.76% | 96.17% |
| 4 | Inception V3 + SAM | 40X | 99.25% | 98.41% | 99.63% | 99.20% | 98.80% |
| | | 100X | 99.06% | 98.56% | 99.30% | 98.56% | 98.56% |
| | | 200X | 98.76% | 98.39% | 98.92% | 97.60% | 97.99% |
| | | 400X | 99.73% | 100.00% | 99.60% | 99.15% | 99.57% |

Figure 8 Accuracy for Binary Classification

Table 3 Evaluation Performances for Multi-Class Classification (BACH Dataset)

| N | CNN Type | Accuracy | Sensitivity | Specificity | Precision | F1 Score |
|---|---|---|---|---|---|---|
| 1 | VGG19 | 86.88% | 86.88% | 95.63% | 86.88% | 86.89% |
| 2 | Inception V3 | 90.94% | 90.97% | 96.98% | 90.94% | 90.97% |
| 3 | VGG19 + SAM | 96.56% | 96.56% | 98.86% | 96.56% | 96.56% |
| 4 | Inception V3 + SAM | 99.06% | 99.08% | 99.69% | 99.06% | 99.06% |

Figure 9 Accuracy for Multiclass Classification

The training results show the extent to which spatial attention mechanisms improved the performance of the Inception V3 and VGG19 models. Spatial attention was integrated to enhance diagnostic accuracy by focusing the models on critical areas within histology images, and the models were compared with and without spatial attention layers across the different performance metrics. The spatial attention mechanisms raised accuracy and gave a better balance of precision and recall than the same models without attention layers. The attention-enhanced models also showed promise in revealing the subtle features within histology images that indicate malignancy, an essential requirement for breast cancer diagnosis. ROC analyses further showed that the attention mechanisms contributed to better class separability, improving overall performance.

Incorporating spatial attention mechanisms substantially improved the performance of both models. On BreaKHis at 400X magnification (Table 2), accuracy with spatial attention reached 99.73% for Inception V3 and 97.53% for VGG19. Without the spatial attention mechanism, accuracy was considerably lower: 93.41% for Inception V3 and 92.31% for VGG19. This gain supports the idea that spatial attention lets the model focus on the regions of the histology image most pertinent to diagnosis and so discriminate better between benign and malignant tissue.

Precision improved as well. Inception V3 with spatial attention achieves a precision of 99.15%, compared to 90.68% without it, and the precision of VGG19 rose from 88.98% to 95.76%. Higher precision matters because it means fewer false positives, which can lead to wrong diagnoses and put patients through unnecessary worry. Sensitivity increased markedly: spatial attention improved the recall of Inception V3 from 89.17% to 100% and that of VGG19 from 87.50% to 96.58%. Higher recall means the model misses fewer cancer cases, which may turn fatal if not treated in time.

The F1-score, the harmonic mean of precision and recall, also improved considerably. Inception V3 with spatial attention reached an F1-score of 99.57%, up from 89.92%, while VGG19 reached 96.17%, up from 88.24%. The models with spatial attention mechanisms therefore strike a better balance between detecting true positive cases and reducing errors.

Implementing spatial attention mechanisms in deep learning models also brings advantages for interpretability Abdulwahhab et al. (2024). Attention maps allow pathologists to see which areas of the image the model is focusing on, providing extra insight that can be used to validate the results of an automated diagnosis. This increases confidence in automated detection systems and enables their broader application in clinical practice.

Overall, the results of this study demonstrate that incorporating spatial attention into the architecture of both Inception V3 and VGG19 substantially enhances the models' ability to detect breast cancer from histology images. It improves accuracy and precision and gives pathologists a more reliable and interpretable tool for analyzing breast cancer histology images. This could support wider adoption in health systems, making diagnoses faster and more accurate and ultimately benefiting patient care and outcomes.

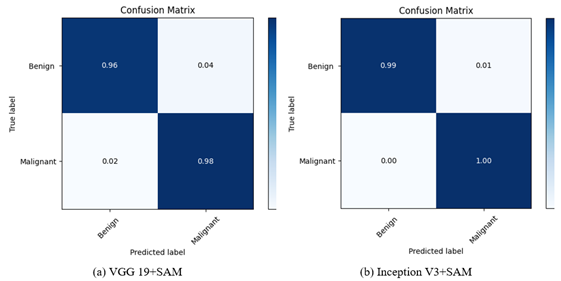

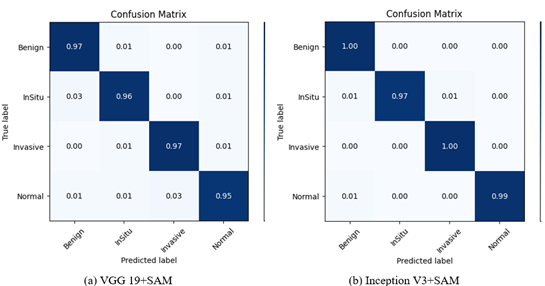

5.1. CONFUSION MATRIX

In binary classification, the confusion matrix summarizes the true positives, false positives, true negatives, and false negatives used to assess a model's performance. In multi-class classification, it compares predicted and actual classes across all categories, highlighting both correctly classified and misclassified instances Aldakhil et al. (2024), as shown in Table 4, Table 5, Figure 10, and Figure 11.

Table 4 Confusion Matrices for Binary Classification (BreaKHis dataset)

| N | CNN Type | Magnification factor | TP | FP | FN | TN | Accuracy |
|---|---|---|---|---|---|---|---|
| 1 | VGG19 | 40X | 113 | 12 | 19 | 255 | 92.23% |
| | | 100X | 125 | 14 | 17 | 270 | 92.72% |
| | | 200X | 112 | 13 | 15 | 263 | 93.05% |
| | | 400X | 105 | 13 | 15 | 231 | 92.31% |
| 2 | Inception V3 | 40X | 117 | 8 | 13 | 261 | 94.74% |
| | | 100X | 128 | 11 | 14 | 273 | 94.13% |
| | | 200X | 114 | 11 | 13 | 265 | 94.04% |
| | | 400X | 107 | 11 | 13 | 233 | 93.41% |
| 3 | VGG19 + SAM | 40X | 121 | 4 | 6 | 268 | 97.49% |
| | | 100X | 133 | 6 | 8 | 279 | 96.71% |
| | | 200X | 118 | 7 | 9 | 269 | 96.03% |
| | | 400X | 113 | 5 | 4 | 242 | 97.53% |
| 4 | Inception V3 + SAM | 40X | 124 | 1 | 2 | 272 | 99.25% |
| | | 100X | 137 | 2 | 2 | 285 | 99.06% |
| | | 200X | 122 | 3 | 2 | 276 | 98.76% |
| | | 400X | 117 | 1 | 0 | 246 | 99.73% |

Table 5 Confusion Matrices for Multi-Class Classification (BACH dataset)

| N | CNN Type | Benign | InSitu | Invasive | Normal | Accuracy |
|---|---|---|---|---|---|---|
| 1 | VGG19 | 86 | 4 | 4 | 1 | 86.88% |
| | | 3 | 71 | 5 | 3 | |
| | | 5 | 2 | 70 | 7 | |
| | | 4 | 3 | 1 | 69 | |
| 2 | Inception V3 | 71 | 2 | 3 | 1 | 90.94% |
| | | 3 | 74 | 3 | 2 | |
| | | 4 | 1 | 73 | 4 | |
| | | 2 | 3 | 1 | 73 | |
| 3 | VGG19 + SAM | 78 | 2 | 0 | 1 | 96.56% |
| | | 1 | 77 | 1 | 1 | |
| | | 0 | 0 | 78 | 2 | |
| | | 1 | 1 | 1 | 76 | |
| 4 | Inception V3 + SAM | 80 | 1 | 0 | 1 | 99.06% |
| | | 0 | 78 | 0 | 0 | |
| | | 0 | 1 | 80 | 0 | |
| | | 0 | 0 | 0 | 79 | |
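To show how the summary figures follow from a multi-class confusion matrix, the sketch below recomputes accuracy and macro-averaged sensitivity for the Inception V3 + SAM matrix in Table 5. The table does not label its rows, so the code assumes that row i holds the samples whose actual class is i and that the rows follow the column order (Benign, InSitu, Invasive, Normal); the function name `multiclass_summary` is hypothetical.

```python
def multiclass_summary(matrix):
    """Return (accuracy, macro_sensitivity) for a square confusion matrix.

    Assumption: row i holds the samples whose actual class is i.
    The source table does not state the orientation.
    """
    n_classes = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n_classes))
    accuracy = correct / total
    # Per-class sensitivity is the diagonal entry over its row total.
    per_class = [matrix[i][i] / sum(matrix[i]) for i in range(n_classes)]
    macro_sensitivity = sum(per_class) / n_classes
    return accuracy, macro_sensitivity


# Inception V3 + SAM matrix as listed in Table 5.
inception_sam = [
    [80, 1, 0, 1],
    [0, 78, 0, 0],
    [0, 1, 80, 0],
    [0, 0, 0, 79],
]
accuracy, macro_sensitivity = multiclass_summary(inception_sam)
print(f"accuracy: {100 * accuracy:.2f}%")
print(f"macro sensitivity: {100 * macro_sensitivity:.2f}%")
```

Accuracy depends only on the diagonal and the total, so it is unaffected by the orientation assumption; under that assumption the macro-averaged sensitivity also comes out in line with the value reported in Table 3.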

Figure 10 Confusion Matrices for 400X (Binary)

Figure 11 Confusion Matrices (Multiclass)

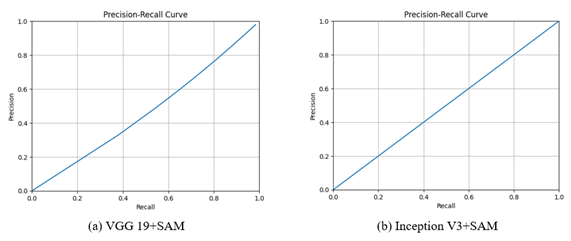

5.2. PRECISION-RECALL CURVES

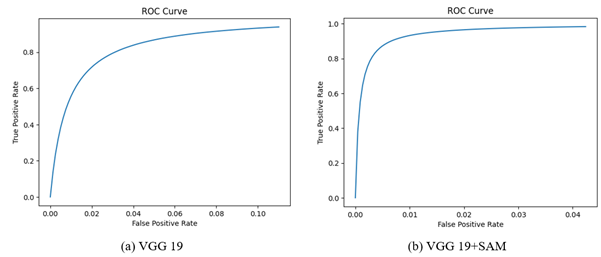

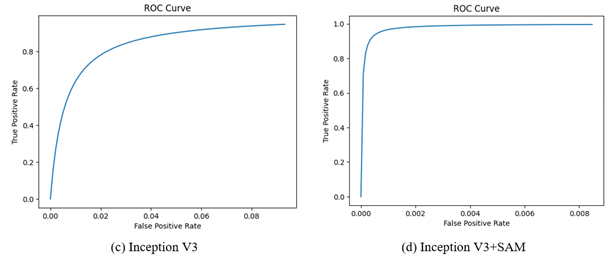

Precision-Recall and ROC curves are vital tools for evaluating classification model performance. The Precision-Recall curve shows how precision and recall change across different decision thresholds, as shown in Figure 12. The ROC curve plots the true positive rate against the false positive rate at different thresholds, as shown in Figure 13. Each curve provides insight into a model's ability to discriminate between classes and helps in choosing a suitable classification threshold.
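The threshold sweep behind an ROC curve can be sketched as follows. The labels and scores are made-up toy values, not model outputs from this study, and the helper names `roc_points` and `area_under_curve` are hypothetical. Each distinct score is used as a threshold, and the area under the resulting curve is estimated with the trapezoidal rule.

```python
def roc_points(labels, scores):
    """Return ROC points (false positive rate, true positive rate).

    A sample is predicted positive when its score is at or above
    the threshold; thresholds are swept from high to low.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def area_under_curve(points):
    """Trapezoidal estimate of the area under a piecewise-linear curve."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Toy data: 1 = malignant, 0 = benign (illustrative values only).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
curve = roc_points(labels, scores)
auc = area_under_curve(curve)
print(curve)
print(auc)
```

A precision-recall curve can be traced with the same sweep by recording precision and recall at each threshold instead of the two rates.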

Figure 12 Precision-Recall Curves for 400X

Figure 13 ROC curves for 400X

6. CONCLUSION

This study explored advanced techniques for breast histopathological classification using two convolutional neural network architectures, Inception V3 and VGG19. Integrating spatial attention mechanisms enhances the models' capacity to identify crucial regions within histology images, improving diagnostic accuracy. The research used the BACH dataset for multiclass classification and the BreaKHis dataset for binary classification, highlighting the importance of comprehensive datasets for training and evaluating model performance. Notably, Inception V3 with the spatial attention mechanism achieved accuracies of 99.73% for binary classification and 99.06% for multiclass classification, demonstrating the potential impact of such integrations on automated breast cancer detection systems. These advancements promise to enhance early diagnosis and treatment planning, ultimately improving patient outcomes. By combining state-of-the-art CNN architectures with attention mechanisms, this study lays groundwork for further progress in medical image analysis. The results underscore the role of technology in advancing healthcare practice and the influence that modern algorithms can have on breast cancer detection.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Abdulaal, A. H., Dheyaa, N. H., Abdulwahhab, A. H., Yassin, R. A., Valizadeh, M., Albaker, B. M., & Mustaf, A. S. (2024). Deep Learning-based Signal Identification in Wireless Communication Systems: a Comparative Analysis on 3G, LTE, and 5G Standards. Al-Iraqia Journal for Scientific Engineering Research, 3(3), 60-70. https://doi.org/10.58564/IJSER.3.3.2024.224

Abdulaal, A. H., Valizadeh, M., AlBaker, B. M., Yassin, R. A., Amirani, M. C., & Shah, A. F. M. S. (2024). Enhancing Breast Cancer Classification Using a Modified GoogLeNet Architecture with Attention Mechanism. Al-Iraqia Journal of Scientific Engineering Research, 3(1). https://doi.org/10.58564/ijser.3.1.2024.145

Abdulaal, A. H., Valizadeh, M., Amirani, M. C., & Shahen Shah, A. F. M. (2024). A self-learning Deep Neural Network for Classification of Breast Histopathological Images. Biomedical Signal Processing and Control, 87(Part B), 105418. https://doi.org/10.1016/j.bspc.2023.105418

Abdulwahhab, A. H., A. H. Abdulaal, Assad, A. A. Mohammed, & M. Valizadeh. (2024). Detection of Epileptic Seizure Using EEG Signals Analysis Based on Deep Learning Techniques. Chaos, Solitons & Fractals, 181, 114700. https://doi.org/10.1016/j.chaos.2024.114700

Aldakhil, L, H. Alhasson, & Alharbi, S. (2024). Attention-Based Deep Learning Approach for Breast Cancer Histopathological Image Multi-Classification. Diagnostics, 14(13), 1402. https://doi.org/10.3390/diagnostics14131402

Ali, L., Alnajjar, F., Jassmi, H. A., Gocho, M., Khan, W., & Serhani, M. A. (2021). Performance Evaluation of Deep CNN-Based Crack Detection and Localization Techniques for Concrete Structures. Sensors, 21(5), 1688. https://doi.org/10.3390/s21051688

Araújo, T., Aresta, G., Castro, E., Rouco, J., Aguiar, P., Eloy, C., Polónia, A., & Campilho, A. (2017). Classification of Breast Cancer Histology Images Using Convolutional Neural Networks. PLOS ONE, 12(6), e0177544. https://doi.org/10.1371/journal.pone.0177544

Balasubramanian, A. A, Awad, M., Singh, A., Breggia, A., Ahmad, B., Christman, R., Ryan, S. T., & Saeed Amal. (2024). Ensemble Deep Learning-Based Image Classification for Breast Cancer Subtype and Invasiveness Diagnosis from Whole Slide Image Histopathology. Cancers, 16(12), 2222. https://doi.org/10.3390/cancers16122222

Cheng, Z., Qu, A., & He, X. (2021). Contour-aware Semantic Segmentation Network with Spatial Attention Mechanism for Medical Image. The Visual Computer, 38(3), 749-762. https://doi.org/10.1007/s00371-021-02075-9

Das, S., & Fabia, M. (2024). Binary Classification of Breast Tumours Using CBAM-Enhanced Collaborative Network. 2024 3rd International Conference on Advancement in Electrical and Electronic Engineering (ICAEEE), 1-6. https://doi.org/10.1109/icaeee62219.2024.10561843

Ding, Q., Shao, Z., Huang, X., & Altan, O. (2021). DSA-Net: a Novel Deeply Supervised attention-guided Network for Building Change Detection in high-resolution Remote Sensing Images. International Journal of Applied Earth Observation and Geoinformation, 105, 102591. https://doi.org/10.1016/j.jag.2021.102591

Elmore, J. G., Longton, G. M., Carney, P. A., Geller, B. M., Onega, T., Tosteson, A. N. A., Nelson, H. D., Pepe, M. S., Allison, K. H., Schnitt, S. J., O'Malley, F. P., & Weaver, D. L. (2015). Diagnostic Concordance among Pathologists Interpreting Breast Biopsy Specimens. JAMA, 313(11), 1122-1132. https://doi.org/10.1001/jama.2015.1405

Giaquinto, A. N., Sung, H., Miller, K. D., Kramer, J. L., Newman, L. A., Minihan, A., Jemal, A., & Siegel, R. L. (2022). Breast Cancer Statistics, 2022. CA: A Cancer Journal for Clinicians, 72(6). https://doi.org/10.3322/caac.21754

Guo, D., Lin, Y., Ji, K., Han, L., Liao, Y., Shen, Z., Feng, J., & Tang, M. (2024). Classify Breast Cancer Pathological Tissue Images Using Multi-Scale Bar Convolution Pooling Structure with Patch Attention. Biomedical Signal Processing and Control, 96, 106607. https://doi.org/10.1016/j.bspc.2024.106607

Guo, M.-H., Xu, T.-X., Liu, J.-J., Liu, Z.-N., Jiang, P.-T., Mu, T.-J., Zhang, S.-H., Martin, R. R., Cheng, M.-M., & Hu, S.-M. (2022). Attention Mechanisms in Computer vision: a Survey. Computational Visual Media, 8(3), 331-368. https://doi.org/10.1007/s41095-022-0271-y

Huang, S., Yang, J., Fong, S., & Zhao, Q. (2019). Artificial Intelligence in Cancer Diagnosis and prognosis: Opportunities and Challenges. Cancer Letters, 471(0304-3835). https://doi.org/10.1016/j.canlet.2019.12.007

Jadoon, E. K., F.G. Khan, S. A.Shah, Khan, A., & ElAffendi, M. (2023). Deep Learning-Based Multi-Modal Ensemble Classification Approach for Human Breast Cancer Prognosis. IEEE Access, 1-1. https://doi.org/10.1109/access.2023.3304242

Jasti, V. D. P., Zamani, A. S., Arumugam, K., Naved, M., Pallathadka, H., Sammy, F., Raghuvanshi, A., & Kaliyaperumal, K. (2022). Computational Technique Based on Machine Learning and Image Processing for Medical Image Analysis of Breast Cancer Diagnosis. Security and Communication Networks, 2022, e1918379. https://doi.org/10.1155/2022/1918379

Kausar, T., Lu, Y., & Kausar, A. (2023). Breast Cancer Diagnosis Using Lightweight Deep Convolution Neural Network Model. IEEE Access, 11, 124869-124886. https://doi.org/10.1109/access.2023.3326478

Litjens, G., Kooi, T., Bejnordi, B. E., Setio, A. A. A., Ciompi, F., Ghafoorian, M., van der Laak, J. A. W. M., van Ginneken, B., & Sánchez, C. I. (2017). A Survey on Deep Learning in Medical Image Analysis. Medical Image Analysis, 42(1), 60-88. https://doi.org/10.1016/j.media.2017.07.005

Liu, L., Wang, Y., Zhang, P., Qiao, H., Sun, T., Zhang, H., Xu, X., & Shang, H. (2024). Collaborative Transfer Network for Multi-Classification of Breast Cancer Histopathological Images. IEEE Journal of Biomedical and Health Informatics, 28(1), 110-121. https://doi.org/10.1109/jbhi.2023.3283042

Liu, Y., Chen, P.-H. C., Krause, J., & Peng, L. (2019). How to Read Articles That Use Machine Learning: Users' Guides to the Medical Literature. JAMA, 322(18), 1806-1816. https://doi.org/10.1001/jama.2019.16489

McKinney, S. M., Sieniek, M., Godbole, V., Godwin, J., Antropova, N., Ashrafian, H., Back, T., Chesus, M., Corrado, G. C., Darzi, A., Etemadi, M., Garcia-Vicente, F., Gilbert, F. J., Halling-Brown, M., Hassabis, D., Jansen, S., Karthikesalingam, A., Kelly, C. J., King, D., & Ledsam, J. R. (2020). International Evaluation of an AI System for Breast Cancer Screening. Nature, 577(7788), 89-94. https://doi.org/10.1038/s41586-019-1799-6

Moscalu, M, Moscalu, R., C. G. Dascălu, V. Ţarcă, Cojocaru, E., Costin, I., Țarcă, E., & I. L. Șerban. (2023). Histopathological Images Analysis and Predictive Modeling Implemented in Digital Pathology-Current Affairs and Perspectives. Diagnostics, 13(14), 2379. https://doi.org/10.3390/diagnostics13142379

Raaj, R. S. (2023). Breast Cancer Detection and Diagnosis Using Hybrid Deep Learning Architecture. Biomedical Signal Processing and Control, 82, 104558. https://doi.org/10.1016/j.bspc.2022.104558

Rahman, M., Deb, K., P. K. Dhar, & Shimamura, T. (2024). ADBNet: an Attention-Guided Deep Broad Convolutional Neural Network for the Classification of Breast Cancer Histopathology Images. IEEE Access, 1-1. https://doi.org/10.1109/access.2024.3419004

Ritesh Maurya, Nageshwar Nath Pandey, Malay Kishore Dutta, & Mohan Karnati. (2024). FCCS-Net: Breast Cancer Classification Using Multi-Level Fully Convolutional-Channel and Spatial attention-based Transfer Learning Approach. Biomedical Signal Processing and Control, 94, 106258. https://doi.org/10.1016/j.bspc.2024.106258

Sharma, S., Mehra, R., & Kumar, S. (2020). Optimised CNN in Conjunction with Efficient Pooling Strategy for the Multi‐classification of Breast Cancer. IET Image Processing, 15(4), 936-946. https://doi.org/10.1049/ipr2.12074

Shen, L., Margolies, L. R., Rothstein, J. H., Fluder, E., McBride, R., & Sieh, W. (2019). Deep Learning to Improve Breast Cancer Detection on Screening Mammography. Scientific Reports, 9(1). https://doi.org/10.1038/s41598-019-48995-4

Simonyan, K., & Zisserman, A. (2014). Very Deep Convolutional Networks for large-scale Image Recognition. ArXiv Preprint ArXiv:1409.1556.

Spanhol, F. A., Oliveira, L. S., Petitjean, C., & Heutte, L. (2016). A Dataset for Breast Cancer Histopathological Image Classification. IEEE Transactions on Biomedical Engineering, 63(7), 1455-1462. https://doi.org/10.1109/tbme.2015.2496264

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., & Wojna, Z. (2016). Rethinking the Inception Architecture for Computer Vision. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2818-2826. https://doi.org/10.1109/CVPR.2016.308

Ukwuoma, C. C., Hossain, M. A., Jackson, J. K., Nneji, G. U., Monday, H. N., & Qin, Z. (2022). Multi-Classification of Breast Cancer Lesions in Histopathological Images Using DEEP_Pachi: Multiple Self-Attention Head. Diagnostics, 12(5), 1152. https://doi.org/10.3390/diagnostics12051152

Xu, C., Yi, K., Jiang, N., Li, X., Zhong, M., & Zhang, Y. (2023). MDFF-Net: a multi-dimensional Feature Fusion Network for Breast Histopathology Image Classification. Computers in Biology and Medicine, 165, 107385. https://doi.org/10.1016/j.compbiomed.2023.107385

Yan, J., Peng, Z., Yin, H., Wang, J., Wang, X., Shen, Y., Stechele, W., & Cremers, D. (2020). Trajectory Prediction for Intelligent Vehicles Using Spatial‐attention Mechanism. IET Intelligent Transport Systems, 14(13), 1855-1863. https://doi.org/10.1049/iet-its.2020.0274

Zhou, Y., Zhang, C., & Gao, S. (2022). Breast Cancer Classification from Histopathological Images Using Resolution Adaptive Network. IEEE Access, 10, 35977-35991. https://doi.org/10.1109/access.2022.3163822

Zou, Y., Zhang, J., Huang, S., & Liu, B. (2021). Breast Cancer Histopathological Image Classification Using Attention High‐order Deep Network. International Journal of Imaging Systems and Technology, 32(1), 266-279. https://doi.org/10.1002/ima.22628


This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhAI 2024. All Rights Reserved.