The Future of Recruitment: Evaluating AI-Based Screening Tools

Indrayani Yadav 1, Dr. Priya Satsangi 2

1 BBA, Amity Business School, Amity University Maharashtra, Mumbai, India

2 Associate Professor, Amity Business School, Amity University Maharashtra, Mumbai, India

ABSTRACT

Artificial Intelligence (AI) has become a transformative force in modern recruitment, allowing companies to automate candidate screening, reduce time-to-hire, and process large applicant pools efficiently. Yet, despite these advantages, concerns about fairness, bias, transparency, and the loss of human touch continue to shape public perception. This research paper aims to evaluate the awareness, experiences, and attitudes of job seekers toward AI-based screening tools. A quantitative survey was conducted among 80 respondents from different educational and professional backgrounds. The results revealed that while 81% of respondents were aware of AI recruitment tools, trust levels remained moderate, and 85% still preferred some form of human involvement in hiring decisions. Participants recognized AI's potential for improving efficiency but questioned its fairness and accountability. The findings suggest that the future of recruitment should balance automation with empathy and ethical oversight to ensure candidates are evaluated not only by algorithms but also by people who understand human potential.


Received 12 September 2025; Accepted 20 October 2025; Published 24 November 2025

DOI: 10.29121/ShodhAI.v2.i2.2025.52

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the CC-BY license, authors retain the copyright, allowing anyone to download, reuse, reprint, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: Recruitment, Artificial Intelligence, Screening Tools, Fairness, Humanization, Ethical Hiring


1. INTRODUCTION

The recruitment landscape has undergone a significant transformation in the past decade, largely driven by advancements in Artificial Intelligence (AI). From automated resume screening and chatbots to video interview analysis powered by machine learning, AI-based tools are reshaping how organizations identify, evaluate, and select candidates. Companies today face overwhelming volumes of applications, and AI promises efficiency by filtering resumes, predicting performance, and even assessing soft skills through facial or linguistic analysis. According to industry reports, more than 60% of large organizations globally have already integrated AI in at least one stage of their hiring process.

However, with innovation comes uncertainty. Candidates often find themselves being judged by systems they do not fully understand, while recruiters grapple with questions about fairness, bias, and accountability. AI screening systems, though data-driven, are not free from human bias — they learn from historical hiring data, which may reflect existing inequalities. For example, if past hiring favored certain groups, the algorithm might unintentionally continue that pattern, reinforcing discrimination instead of eliminating it. This has raised ethical debates about whether AI enhances or undermines inclusivity in recruitment.

Moreover, the human experience of recruitment is changing. For many job seekers, applying for a role has become an impersonal process, lacking feedback and emotional connection. Candidates express discomfort when rejected by algorithms without explanation, leading to a growing demand for transparency and “human-in-the-loop” approaches. As workplaces emphasize diversity, equity, and inclusion, striking a balance between technological efficiency and human empathy becomes crucial.

This study explores how individuals perceive AI-based screening tools and whether they trust these systems to make fair hiring decisions. It aims to understand awareness levels, actual experiences with AI screening, and the degree to which people believe such tools should influence final hiring outcomes. By examining both the advantages and perceived drawbacks, the research seeks to answer a broader question — can the future of recruitment be both intelligent and humane?

2. LITERATURE REVIEW

Artificial Intelligence (AI) is revolutionizing recruitment practices across industries, offering data-driven solutions that promise speed, efficiency, and objectivity. AI-based screening tools are designed to assist employers in handling large applicant volumes by automating repetitive processes such as resume sorting, skill matching, and preliminary candidate assessments Upadhyay and Khandelwal (2018). These tools use algorithms and natural language processing to identify relevant keywords, evaluate qualifications, and predict candidate success. As companies increasingly adopt such systems, researchers and practitioners have begun to examine their implications for fairness, transparency, and overall candidate experience.

3. Evolution of AI in Recruitment

The introduction of AI into recruitment can be traced to the need for efficiency and accuracy in managing the hiring pipeline. Early systems, such as Applicant Tracking Systems (ATS), focused primarily on keyword matching and document parsing. Over time, with the advancement of machine learning and big data analytics, AI tools evolved into more sophisticated systems capable of analyzing behavioral traits, emotional cues, and video interviews. For instance, HireVue and Pymetrics use algorithms to evaluate candidate facial expressions, tone of voice, and game-based responses to predict job fit Tambe et al. (2019). These innovations aim to minimize human bias and enhance predictive accuracy, positioning AI as a potential game-changer in human resource management.
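To make the keyword-matching stage of early ATS tools concrete, the following is a minimal Python sketch of how such a filter might score a resume against a list of required keywords. It is an illustration under stated assumptions, not how any particular vendor's system works: the function names `keyword_score` and `passes_screen`, the tokenization rule, and the 0.5 threshold are all hypothetical, and only single-word keywords are supported.

```python
import re


def keyword_score(resume_text: str, required_keywords: list[str]) -> tuple[float, list[str], list[str]]:
    """Hypothetical ATS-style check: share of required keywords present in a resume.

    Assumes single-word keywords; multi-word phrases would need phrase matching.
    """
    # Lowercase and split into word tokens (keeping + and # so "c++" or "c#" survive).
    tokens = set(re.findall(r"[a-z0-9+#]+", resume_text.lower()))
    matched = [kw for kw in required_keywords if kw.lower() in tokens]
    missing = [kw for kw in required_keywords if kw.lower() not in tokens]
    score = len(matched) / len(required_keywords) if required_keywords else 0.0
    return score, matched, missing


def passes_screen(resume_text: str, required_keywords: list[str], threshold: float = 0.5) -> bool:
    """Hypothetical pass/fail rule: advance the resume if enough keywords appear."""
    score, _, _ = keyword_score(resume_text, required_keywords)
    return score >= threshold


if __name__ == "__main__":
    resume = "Analyst with SQL, Python and Excel experience."
    keywords = ["python", "sql", "tableau"]
    score, matched, missing = keyword_score(resume, keywords)
    print(f"score={score:.2f} matched={matched} missing={missing}")
    # prints: score=0.67 matched=['python', 'sql'] missing=['tableau']
    print(passes_screen(resume, keywords))  # True
```

A filter like this also shows why candidates can be screened out for wording rather than ability: under this tokenization, a resume that says "PostgreSQL" instead of "SQL" would not match the `sql` keyword.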

4. Efficiency and Organizational Benefits

Numerous studies emphasize the operational advantages of AI screening tools. They significantly reduce recruitment costs and time-to-hire, allowing HR departments to focus on strategic decision-making rather than administrative tasks Black and van (2020). Automated screening ensures that recruiters spend more time engaging with qualified candidates rather than sorting through irrelevant resumes. Additionally, AI tools can help identify patterns in candidate data, improve workforce planning, and enhance diversity by flagging potential underrepresented applicants. In theory, these benefits suggest that AI systems can foster more merit-based hiring when properly designed and implemented.

5. Bias and Ethical Concerns

Despite these benefits, ethical challenges have been widely documented. One major concern is algorithmic bias — when AI tools learn from biased historical data, they risk perpetuating existing inequalities in hiring Raghavan et al. (2020). For example, Amazon famously discontinued its AI recruitment program after discovering gender bias in its model, which downgraded resumes containing the word “women’s” or references to female institutions Dastin (2018). This case demonstrates that AI, while objective in structure, reflects the values and patterns embedded in the data it is trained on. Scholars argue that true fairness in AI recruitment depends on careful data auditing, ethical oversight, and continuous human monitoring.
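One widely used way to put the careful data auditing mentioned above into practice is to compare selection rates across applicant groups. The minimal Python sketch below computes the adverse impact ratio behind the four-fifths rule from the US Uniform Guidelines on Employee Selection Procedures: a group whose selection rate falls below 80% of the highest group's rate is flagged for review. This is one common heuristic rather than a method proposed by the studies cited here, and the group labels and counts in the example are hypothetical, not data from this study.

```python
def adverse_impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to its selection rate divided by the highest group's rate.

    `outcomes` maps a group label to (number selected, number of applicants).
    """
    rates = {group: selected / applicants for group, (selected, applicants) in outcomes.items()}
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group had any candidates selected")
    return {group: rate / highest for group, rate in rates.items()}


def flag_adverse_impact(outcomes: dict[str, tuple[int, int]], threshold: float = 0.8) -> list[str]:
    """Return groups whose impact ratio falls below the threshold (0.8 = four-fifths rule)."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical screening outcomes from an AI tool: (shortlisted, applied).
    outcomes = {"group_a": (30, 100), "group_b": (18, 100)}
    print(adverse_impact_ratios(outcomes))
    print(flag_adverse_impact(outcomes))  # ['group_b']
```

A flag is a prompt for human investigation rather than proof of discrimination; small applicant pools in particular can produce unstable ratios.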

Transparency is another critical issue. Candidates often do not understand how AI screening systems evaluate their applications or why they are rejected. Lack of feedback not only creates distrust but also contributes to candidate anxiety van and Black (2019). Moreover, limited regulatory frameworks mean that candidates have few legal rights to contest automated decisions, leading to concerns about accountability. The European Union’s General Data Protection Regulation (GDPR) has introduced provisions on algorithmic decision-making, emphasizing the “right to explanation,” yet global enforcement remains inconsistent.

6. Perceptions and Candidate Experience

Studies have also explored how candidates perceive AI-driven hiring processes. Research by Lee (2021) found that while applicants appreciate the speed and convenience of AI systems, they often feel alienated by the lack of personal interaction. Many respondents expressed a preference for blended approaches, where AI is used in early screening stages but final decisions remain human-led. Trust plays a key role — candidates who perceive AI as transparent and explainable are more likely to view it as fair Suen et al. (2019). On the other hand, when algorithms are seen as “black boxes,” skepticism increases, and organizational reputation may suffer.
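The blended approach many respondents favored, with AI in early screening and humans making final decisions, can be expressed as a simple routing rule. In the minimal Python sketch below, the AI score only decides which human queue a candidate enters and never issues a rejection on its own; each routing also carries a plain-language reason, reflecting the link between explainability and perceived fairness described in this paragraph. The `Candidate` and `Routing` types, the queue names, and the 0.7 threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    ai_score: float  # hypothetical screening-model score in [0, 1]


@dataclass
class Routing:
    name: str
    queue: str   # which human step the candidate goes to next
    reason: str  # plain-language explanation that can be shared with the candidate


def route(candidates: list[Candidate], shortlist_threshold: float = 0.7) -> list[Routing]:
    """Human-in-the-loop routing: AI prioritizes, but a person makes every final decision."""
    routed = []
    for c in candidates:
        if c.ai_score >= shortlist_threshold:
            queue = "human_interview"
            reason = f"AI score {c.ai_score:.2f} met the shortlist threshold of {shortlist_threshold:.2f}"
        else:
            # No automatic rejection: a recruiter reviews lower-scoring applications.
            queue = "human_review"
            reason = f"AI score {c.ai_score:.2f} was below {shortlist_threshold:.2f}; a recruiter will review the application"
        routed.append(Routing(c.name, queue, reason))
    return routed


if __name__ == "__main__":
    for r in route([Candidate("A", 0.91), Candidate("B", 0.42)]):
        print(r.name, r.queue, "-", r.reason)
```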

Humanizing technology in recruitment has become an emerging discussion point. According to Langer et al. (2023), integrating empathy-driven communication, transparent scoring criteria, and feedback loops into AI-based processes can enhance the overall candidate experience. Organizations that provide clear explanations about how AI evaluates applicants tend to maintain stronger employer branding and candidate trust.

7. RESEARCH METHODOLOGY

For this study, I wanted to understand what job seekers really think about AI being used in hiring. So, I designed a simple online survey and shared it with students, recent graduates, and young professionals — the people most likely to face AI screening while job hunting. A total of 80 respondents participated, answering structured questions about their awareness, trust, fairness concerns, and preference for human involvement in recruitment. Since the goal was to collect opinions and measure how common each viewpoint is, I used a quantitative descriptive research design. The responses were then analyzed to see patterns in how people feel about AI-powered hiring tools. Overall, this method helped capture real perceptions directly from those experiencing the shift in recruitment technology.

7.1. RESEARCH GAP

Although extensive literature exists on the technical capabilities and ethical risks of AI recruitment tools, fewer studies have focused on public awareness, perceived fairness, and trust among job seekers — particularly in the Indian context. Most existing research is Western-centric, leaving a gap in understanding how diverse labor markets perceive these technologies. This study addresses that gap by empirically examining awareness, trust, and perceived fairness of AI-based screening among Indian respondents, providing insights into how humanization can shape the future of recruitment.

7.2. STATEMENT OF THE PROBLEM

In today’s competitive job market, organizations are increasingly adopting Artificial Intelligence (AI)-based screening tools to streamline recruitment and identify the best candidates quickly. While these tools offer benefits such as efficiency, cost reduction, and data-driven decision-making, they also raise critical ethical and human concerns. Many candidates feel uncertain about how AI algorithms evaluate their applications, often questioning the fairness, transparency, and presence of human bias in automated systems. Moreover, the lack of feedback after AI-driven rejections contributes to a sense of alienation among job seekers.

The problem, therefore, lies in understanding whether AI-based screening systems truly enhance recruitment effectiveness or compromise fairness and trust. There is a growing need to evaluate candidates’ awareness, perceptions, and acceptance of AI-driven recruitment tools. This study addresses this gap by investigating how individuals perceive AI-based screening, how much they trust these systems, and whether they still prefer human intervention in the final hiring process.

8. RESEARCH DESIGN

The present study adopts a descriptive quantitative research design to analyze the perceptions of individuals toward AI-based screening tools. A structured survey method was used to collect data, focusing on awareness, trust, fairness, and preferences related to AI recruitment.

Population and Sample

· The population of the study includes students, recent graduates, and early-career professionals who are potential job seekers.

· A sample of 80 respondents was selected using convenience sampling through online distribution methods such as Google Forms and email.

8.1. RESEARCH QUESTIONS

1) What percentage of respondents are aware of AI-based screening tools used in recruitment?

2) How many have personally experienced AI-based recruitment systems?

3) Do respondents trust AI screening tools to make fair decisions?

4) What impact do AI screening tools have on diversity and inclusion?

5) Do candidates prefer human involvement even when AI is used in the hiring process?

The bar graph shows that:

35 respondents have a moderate level of trust in AI screening tools.

20 respondents express high trust.

15 respondents show low trust.

10 respondents have no trust at all.

This pattern reveals a cautious optimism among participants. While most believe AI can improve hiring efficiency, there’s still skepticism regarding fairness, accuracy, and bias. The moderate-to-high trust levels suggest candidates are open to AI involvement, but transparency and ethical use remain key to gaining full confidence.

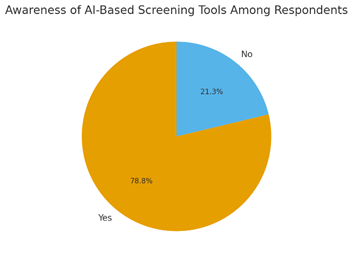

8.2. Interpretation of the Pie Chart

The chart shows that approximately 79% of respondents are aware that employers use AI-based tools during recruitment, while 21% are not.

This indicates a high level of awareness about AI-driven hiring processes, likely influenced by social media discussions, online job platforms, and exposure to digital hiring systems. However, the remaining segment highlights a knowledge gap, suggesting that not everyone fully understands how AI affects job applications — an important insight for HR professionals seeking transparency and candidate trust.

9. RESULT

In the research on "The Future of Recruitment: Evaluating AI-Based Screening Tools," responses were collected from a sample of 80 participants, largely students, job seekers, and early-career professionals. The data were collected through a structured online survey designed to analyze awareness, perception, and trust in AI-driven recruitment methods.

The pie chart on the awareness of AI-based screening tools shows that 79% of the respondents are aware that companies use AI-based systems to screen applicants, while 21% are not aware. This demonstrates that AI in recruitment has become a well-recognized phenomenon, especially among the young generation who actively use digital job platforms like LinkedIn and Indeed.

The bar graph of trust levels toward AI-based screening tools showed a mixed picture. About 69% of respondents (20 with high trust and 35 with moderate trust) think that AI can bring efficiency and fairness to recruitment by reducing human bias and processing large volumes of applications quickly. The remaining 31% (15 with low trust and 10 with no trust) raised concerns about transparency, ethical implications, and algorithmic bias.
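The percentages in this paragraph follow directly from the four counts reported for the bar graph. The short Python check below recomputes each trust level's share of the 80 respondents using only those reported counts. It shows that 43.75% is the share of the moderate-trust group alone, while high and moderate trust together account for 68.75% (about 69%), and low and no trust together for 31.25%.

```python
# Trust counts as reported for the bar graph (n = 80).
trust_counts = {"high": 20, "moderate": 35, "low": 15, "none": 10}
total = sum(trust_counts.values())


def share(levels: list[str]) -> float:
    """Percentage of respondents whose trust level is in `levels`."""
    return 100 * sum(trust_counts[level] for level in levels) / total


if __name__ == "__main__":
    print(total)  # 80
    for level in trust_counts:
        # prints high 25.00%, moderate 43.75%, low 18.75%, none 12.50% on separate lines
        print(level, f"{share([level]):.2f}%")
    print(f"{share(['high', 'moderate']):.2f}%")  # 68.75%
    print(f"{share(['low', 'none']):.2f}%")       # 31.25%
```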

Qualitative responses further suggested that while AI is perceived as a time-saving innovation, candidates still value the “human touch” in hiring decisions. Many participants believe that emotional intelligence, communication skills, and personality fit cannot be fully measured through automated tools alone. Thus, AI should complement — not replace — human recruiters.

Overall, the findings highlight a growing awareness and cautious acceptance of AI in recruitment. Respondents acknowledge its advantages in streamlining processes but emphasize the need for accountability, explainability, and fairness in how these tools are implemented.

10. CONCLUSION

This study concludes that AI-powered recruitment tools are shaping the future of hiring, but their success depends on striking a balance between technology and human judgment. A majority of respondents believe AI can make hiring more efficient, reduce bias, and ground decisions in data, yet skepticism remains because of ethical dilemmas, a lack of transparency, and the fear of depersonalization.

Fairness and inclusivity are paramount in such systems, and developers must ensure that their tools uphold these values in the workplace. Being transparent with candidates about how AI works will help ease fears about automated decision-making.

To put it succinctly, AI in recruitment is not a fad but a paradigm shift. The future of ethical and effective hiring, however, hinges on how well we can integrate machine intelligence with human empathy. A hybrid model, in which AI handles the data and humans handle the emotion, seems the most sustainable approach for the evolving workplace.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Bogen, M., and Rieke, A. (2018). Help Wanted: An Examination of Hiring Algorithms, Equity, and Bias. Upturn.

Dastin, J. (2018). Amazon Scraps Secret AI Recruiting Tool That Showed Bias Against Women. Reuters.

Edwards, M. R., and Sutanto, J. (2021). Artificial Intelligence in Recruitment: A Review and Research Agenda. International Journal of Human Resource Management, 32(20), 4142–4173. https://doi.org/10.1080/09585192.2020.1835598

Jarrahi, M. H. (2018). Artificial Intelligence and the Future of Work: Human-AI Symbiosis in Organizational Decision Making. Business Horizons, 61(4), 577–586. https://doi.org/10.1016/j.bushor.2018.03.007

Upadhyay, A. K., and Khandelwal, K. (2018). Applying Artificial Intelligence: Implications for Recruitment. Strategic HR Review, 17(5), 255–258. https://doi.org/10.1108/SHR-07-2018-0051

Yarger, L., Cobb Payton, F., and Neupane, B. (2020). Algorithmic Recruitment: The Impact of Big Data on Hiring Decisions. Journal of Business Ethics, 163(2), 329–343. https://doi.org/10.1007/s10551-018-4056-2


This work is licensed under a Creative Commons Attribution 4.0 International License.

© ShodhAI 2025. All Rights Reserved.